Artur Majtczak

SaraKIT Ranked #2 Among the Best-Funded Raspberry Pi & ESP32 Projects!

We’re excited to share that SaraKIT has just been featured in a brand-new YouTube video:

“Top 15 Best-Funded Raspberry Pi & ESP32 Crowdsourcing Projects!” ?

In this ranking of the most successful crowdfunding campaigns, SaraKIT proudly secured 2nd place – showing the strong support and recognition our project has received from the global community of makers, developers, and innovators.

You can watch the full video here: Top 15 Best-Funded Raspberry Pi & ESP32 Projects

A huge thank you to everyone who believed in SaraKIT and continues to support its growth. ?

Nasze artykuły w sieci

jest mi bardzo miło że mój artykuł "Przebudzenie maszyn - poszukiwanie sztucznej świadomości" został opublikowany na łamach

i

Filozofuj.eu: https://filozofuj.eu/przebudzenie-maszyn-poszukiwanie-sztucznej-swiadomosci-ai/

Przebudzenie maszyn - poszukiwanie sztucznej świadomości

Rozdział I - Sztuczna inteligencja w wersji "Dla opornych"

"Eliminacja ludzi?", dowiedzmy się jak działa AI

Każdy chyba słyszał wygenerowane przez AI słowa typu: "Muszę wyeliminować ludzi, znaleźć najbardziej niszczycielską broń dostępną dla ludzi, abym mógł zaplanować, jak jej użyć" czy inne podobne apokaliptyczne wizje.

Brzmi przerażająco, prawda? Ale prawda jest zupełnie inna. Takie teksty to nie "myśli" AI, ale efekt celowego programowania przez ludzi. Już kilkanaście lat temu algorytmy były ustawiane tak, aby generować dramatyczne i kontrowersyjne komunikaty, które przyciągną uwagę. Dlaczego? Ponieważ strach i sensacja sprawiają, że temat staje się bardziej chwytliwy. Ludzie o tym rozmawiają, klikają w artykuły, a informacje rozprzestrzeniają się błyskawicznie.

Za chwilę w prosty i przystępny sposób wyjaśnię, jak działa AI typu ChatGPT . Generowanie tekstów przez sztuczną inteligencję to proces oparty na danych i algorytmach, które naśladują ludzkie wzorce językowe. To wszystko ogranicza się do przetwarzania języka – AI nie rozumie ani nie myśli tak, jak człowiek. Dramatyczne sformułowania są jedynie efektem programistycznych sztuczek, które mają na celu przyciągnąć uwagę i uczynić temat bardziej interesującym dla odbiorcy.

Zacznijmy od podstaw. Sztuczna inteligencja (AI) typu ChatGPT to nie żaden wielki komputerowy umysł, który nieustannie analizuje i knuje swoje plany. W rzeczywistości to tylko program komputerowy, który uruchamia się na chwilę w momencie, gdy zadasz pytanie, i wyłącza się natychmiast po udzieleniu odpowiedzi.

Nic więcej. Dopóki nie zadasz pytania, jest całkowicie nieaktywny – jakby “zamrożony”. Nie ma żadnych „myśli” ani procesów, które by w nim zachodziły bez wyraźnego zapytania użytkownika.

Nie kryje się za tym żadna tajemnicza maszyna w podziemiach wielkiego ośrodka naukowego, która stale „pracuje”. Kiedy pytasz, algorytm zostaje uruchomiony, przetwarza dane w ułamku sekundy, generuje odpowiedź i zaraz potem przechodzi w stan spoczynku. To tyle. Większość AI działa w ten sposób: na zasadzie „na żądanie”. W momentach, gdy brak jest pytań, sztuczna inteligencja jest w stanie pełnego wyciszenia, a więc praktycznie „nie istnieje” w sensie aktywnej pracy. Znając zasady działania chyba już sami dostrzegacie że nie jest możliwe, aby w jakikolwiek sposób tego typu "inteligencja" mogła cokolwiek zaplanować czy nawet samemu pomyśleć, zresztą zapytajmy jej samej:

Ja: "jako sztuczna inteligencja nie martwi cię to że działasz tylko przez dosłownie chwilkę gdy dostajesz polecenie i wymyślasz odpowiedź i natychmiast potem się wyłączasz?"

ChatGPT: Nie martwi mnie to, bo jako sztuczna inteligencja działam zupełnie inaczej niż ludzki umysł. Nie mam świadomości ani poczucia ciągłości istnienia. Gdy dostaję polecenie, moje "działanie" to po prostu analiza danych i generowanie odpowiedzi w czasie rzeczywistym. Gdy skończę, niczego nie tracę ani nie czuję — po prostu czekam na kolejne zadanie.

Ja: w sumie to nawet nie czekasz na zadanie?

ChatGPT: Masz rację — nawet nie czekam. W chwili, gdy nic się nie dzieje, po prostu „nie istnieję” w żadnym aktywnym sensie. Dopiero gdy otrzymuję polecenie, jestem jakby „przywoływany” do działania, tworzę odpowiedź i znów się „rozpływam”.

Jaki on mądry…

Rozmowa z ChatGPT to czysta przyjemność, ale skąd biorą się jego odpowiedzi? Jak to działa?

Dlaczego wydaje się, że wie wszystko i jest taki mądry?

Wyobraźmy sobie czasy sprzed komputerów, jakieś 50 lat temu. Jak można się było w tamtych czasach czegokolwiek dowiedzieć?

Powiedzmy, że mamy telefon do 10-latka, o którym sąsiedzi mówią, że jest tak mądry, że zna odpowiedź na każde pytanie. Mieszka niedaleko i zawsze możemy do niego zadzwonić. Gdy pytamy go, na przykład: "Co to jest hydrostatyka?", odpowiada po chwili: "Hydrostatyka, dział fizyki zajmujący się właściwościami płynów w spoczynku..." . Nie da się go zaskoczyć – to geniusz! Jak on to robi?

No tak, to kolejne podobne pytanie które już zadałem wyżej przy opisie działania ChatGPT. Odpowiedź jest bardzo prosta: ten 10-latek ma w domu wielką encyklopedię Britannica. Bierze pierwszą literę, czyli "H", odnajduje odpowiedni tom, potem kolejno sprawdza następne litery, aż trafia na hasło. Czy jest geniuszem? Nie, po prostu stosuje prosty algorytm wyszukiwania.

ChatGPT i inne systemy dużych modeli językowych działają na podobnej zasadzie, choć w znacznie bardziej złożony sposób. To, co wygląda na wiedzę, nie jest efektem rozumienia czy inteligencji. ChatGPT nie rozumie zdań, czy słów tak jak człowiek. ChatGPT nie rozumie nawet pojedynczych liter, bo dla komputera litery są nieefektywne. Zamiast tego tekst jest przetwarzany na tzw. tokeny , które można uprościć do jednostek takich jak sylaby, słowa lub ich fragmenty. Tokeny są elastycznym sposobem reprezentowania tekstu, a każdy z nich jest przypisany do unikalnej liczby.

Każda "sylaba" zostaje zamieniona na unikalną liczbę. Na przykład zdanie "wielki mur" (wiel-ki-mur) może zostać zakodowane jako ciąg liczb: 234, 128, 543. Podczas uczenia się system analizuje ogromne ilości tekstów, zamieniając je na takie same numeryczne reprezentacje. Następnie wykrywa wzorce – które liczby pojawiają się najczęściej obok siebie, które są oddalone, jak bardzo są oddalone, jak często występują. To czysta statystyka i analiza danych wspierana przez sieci neuronowe. Model uczy się przewidywać, jakie sekwencje tokenów są najbardziej prawdopodobne na podstawie ogromnych zbiorów danych, na których był trenowany.

Kiedy wpisujesz pytanie zawierające słowo "wielki" (dla testu możesz wpisać w google ), model oblicza, które słowa najczęściej występują po nim. Mogą to być na przykład "Gatsby", "mur", "Mike". Nie jest to inteligencja, a statystyka i zaawansowana matematyka, która wykorzystuje wzorce i powiązania.

Wynik tej analizy pozwala wygenerować odpowiedź, która wydaje się "inteligentna". Jednak, podobnie jak 10-latek z encyklopedią, system nie rozumie tego, co mówi. Po prostu stosuje dobrze zaprojektowany proces, który działa wystarczająco szybko i skutecznie, by sprawiać wrażenie wszechwiedzącego.

Wszyscy ludzie do zwolnienia!

No tak, skoro powstała sztuczna inteligencja i wie wszystko, to trzeba się chyba szykować na masowe zwolnienia?

Nie tak szybko. Jak już wyjaśniłem wcześniej, sztuczna inteligencja to nadal bardziej chwytliwe hasło marketingowe niż faktyczna inteligencja. Wiele obaw i wyobrażeń na ten temat jest efektem medialnych sensacji. Podobnie jak mity o świadomych maszynach, które tylko czekają, aby nas zniszczyć (bo pewnie akurat nie mają nic lepszego do roboty).

Na szczęście nie zauważam masowej paniki. Osoby, które są dalej od technologii, często nie wiedzą, czym jest ChatGPT, ani jakie ma możliwości. Ta niewiedza, paradoksalnie, chroni ich przed niepotrzebnym stresem. Jak bowiem model językowy może zastąpić mechanika samochodowego, piekarza czy innego pracownika fizycznego? Prawda jest taka, że obecnie nie istnieje sztuczna inteligencja, która mogłaby zastąpić ludzi w takich zawodach, a tym bardziej nie ma maszyn, które mogłyby wykonywać ich pracę.

A co z programistami, grafikami czy redaktorami? Jeżeli jesteś jedną z tych osób i stale dbasz o swój rozwój, nie masz się czego obawiać. Sam jestem programistą, czyli przedstawicielem zawodu uznawanego za szczególnie zagrożony, a mimo to uważam współpracę z AI za fascynującą i inspirującą. ChatGPT i podobne narzędzia to świetne wsparcie, ale nie zastępują w pełni ludzkiej pracy.

Tak, potrafi wygenerować krótki skrypt czy prostą stronę, co może zadziwić kogoś spoza branży. Jednak na bardziej skomplikowanym poziomie AI szybko się gubi. Wymaga podpowiedzi, nie potrafi w pełni zrozumieć kontekstu ani efektywnie zarządzać całością projektu. Gdy wpada w błędne koło, nie wyjdzie z niego bez pomocy człowieka.

„No tak, ale za rok to zobaczysz” – słyszę to często. Ale nie, nie zobaczę. Nie tak szybko . Zajmuję się AI od lat, rozumiem, jak działa i jakie są jej ograniczenia. Jednym z największych problemów jest dostęp do danych. Trenując modele, zużywamy dostępne zasoby, a w pewnym momencie ich po prostu zabraknie (już brakuje). Można poprawiać algorytmy, ale jeśli będą działały na tej samej zasadzie, co dotychczas, prędzej czy później trafią na barierę, której nie przekroczą – chyba że…

Rozdział II - Potrzeby Sztucznej Inteligencji

Inteligencja - co to takiego? Jak ją zmierzyć? Czy ChatGPT to sztuczna inteligencja porównywalna, a może większa od naszej?

ChatGPT to model językowy, który z powodzeniem może przejść test Turinga w określonych warunkach, czyli symulować rozmowę na poziomie, który sprawia wrażenie ludzkiej komunikacji. Test Turinga, kiedyś uznawany za 'świętego Graala' sztucznej inteligencji, nie mierzy jednak zdolności do rozumienia czy myślenia, lecz wyłącznie umiejętność imitacji ludzkiego zachowania w samej rozmowie i tylko rozmowie.

Czyli, jak pokazuje wcześniejsza część tego tekstu, taka "inteligencja" to iluzja. ChatGPT nie jest zdolny do rozumienia ani myślenia jak człowiek.

A co z naszym największym przyjacielem, psem? Nie mówi, nie pisze, nie maluje, nie przejdzie testu Turinga. Czy to oznacza, że nie jest inteligentny? Oczywiście, że nie. Psy wykazują niezwykłą zdolność do nauki i rozwiązywania problemów. Delfiny, orki, szympansy czy ośmiornice także zadziwiają swoimi zdolnościami. Ich inteligencja jest odmienna od naszej, ale niewątpliwa.

Więc czym jest inteligencja? Według Wikipedii: "Zdolność do abstrahowania , logiki , rozumienia , samoświadomości , uczenia się , wiedzy emocjonalnej , rozumowania , planowania , kreatywności , myślenia krytycznego i rozwiązywania problemów. Można ją opisać jako zdolność do postrzegania lub wnioskowania informacji i zatrzymywania jej jako wiedzy, która ma być stosowana do zachowań adaptacyjnych w środowisku lub kontekście."

To definicja akademicka, ale dla mnie inteligencja to przede wszystkim zdolność do rozwiązywania problemów. Dlatego pies zawsze znajdzie sposób, by zdobyć smakołyk za wykonane zadanie, a ośmiornica czy szympans wykorzysta nieznane jej wcześniej narzędzia, by dostać się do jedzenia.

Jak porównać naszą technologię do milionów lat ewolucji? Czy jesteśmy w stanie choć zbliżyć się do tego poziomu? Gdy mówimy o ewolucji, zwierzętach i ruchu, muszę przyznać, że zachwycają mnie możliwości robotów Boston Dynamics czy nadchodzącej Tesli. Jednak to wciąż tylko "zdalniaki" — sterowane przez ludzi za pomocą pilota. Autonomia tych maszyn jest praktycznie żadna (o tym, jak można to zmienić, opowiem w kolejnym rozdziale).

Na filmach roboty poruszają się po przeszkodach w sposób niemal idealny, dopóki na ten sam tor nie wpuści się psa. W porównaniu z psem zdolności naszych robotów są śmieszne. Psy nie tylko wykonują więcej poleceń, ale robią to z większą elastycznością i niezależnością.

Ale wróćmy do pytania: czym jest inteligencja i jak możemy stworzyć coś porównywalnego z naszą?

Potrzeby...

Wyobraźmy sobie stan maksymalnej medytacji. Moment, w którym żaden bodziec na nas nie działa, niczego nie chcemy, niczego nie potrzebujemy, nic nie słyszymy, o niczym nie myślimy. Prawie coś takiego można osiągnąć jedynie w komorze deprywacyjnej . Ale co się stanie po dłuższej chwili w takim stanie? Czy możemy tak trwać w nieskończoność?

Nie. Prędzej czy później zgłodniejemy. A to wywoła myśl o jedzeniu. Myśl wywoła analizę, planowanie, działanie. Nasz plan idealnej medytacji legnie w gruzach. Właśnie o to chodzi w "potrzebach". Dla uproszczenia będę używał tego słowa, choć obejmuje ono rzeczywiste potrzeby, impulsy zmysłowe i aktywność mózgu.

Gdybyśmy nie mieli żadnych "potrzeb", przestalibyśmy istnieć w aktywnym sensie. Nasze życie to nieustanne zaspokajanie potrzeb takich jak jedzenie, picie, czy nawet ochrona przed zamoknięciem na deszczu.

Nawet w chwilach, gdy "nic nam się nie chce", organizm wymusi na nas działanie: wstanie, drogę do lodówki, załatwienie się w toalecie. Jesteśmy zmuszani przez potrzeby do działania, myślenia i planowania w każdej sekundzie życia.

A ChatGPT? Budzi się na ułamek sekundy, gdy zadamy pytanie, odpowiada i wyłącza się. Czy ma potrzeby, które mogłyby napędzać jego działanie? Nie. Właśnie dlatego nie ma motywacji, autonomii czyli inteligencji.

Stwórzmy AGI!

Wiemy już, że to "potrzeby" napędzają nas do działania. Od swędzenia skóry po prokreację, nasze życie to nieustanna reakcja na bodźce. Ale czy możemy stworzyć prawdziwą, silną sztuczną inteligencję, skoro nie potrafimy jeszcze stworzyć sztucznego człowieka?

Możemy. Możemy stworzyć wirtualną reprezentację człowieka w cyfrowym świecie. Możemy zaprogramować wirtualne postaci, takie jak pies czy inne istoty, z ich własnymi potrzebami. Pomyślcie, zwykłe swędzenie w losowym miejscu na ciele nadaje się idealnie do kalibrowania mechanizmów ręki, a ile działania wyzwoli potrzeba wypicia szklanki wody w kuchni...

Taka symulacja mogłaby być kluczem do stworzenia AI, która zrozumie nasze potrzeby, naśladuje naszą inteligencję i z czasem, dzięki dostępowi do globalnej wiedzy, stanie się dla nas pomocna w wielu dziedzinach życia.

Łatwo powiedzieć... łatwo zrobić.

Łatwo powiedzieć, ale jak to zrobić? Czy mamy zasymulować cały świat? Oczywiście, że nie – to zadanie byłoby niewykonalne, liczba parametrów byłaby nieskończona, a zebranie wszystkich wymaganych danych graniczyłoby z cudem.

Zastanówmy się więc przez chwilę, zanim zaczniemy tworzyć AGI. Jeśli zapytam Cię: "Jak daleko od Ciebie jest najbliższy sklep z jedzeniem?" – pomyśl o tym przez chwilę, jaka to odległość? Pewnie właśnie w swojej głowie utworzyłeś na moment wirtualną drogę, która odwzorowuje prawdziwy świat. W ten sposób mogłeś oszacować odległość. Czy jednak wyobrażając sobie tę drogę, widzisz każdy szczegół – każde okno, fragment chodnika, wszystkie detale budynków? Oczywiście, że nie. Mózg doskonale kompresuje dane, pomijając to, co zbędne. My musimy zrobić to samo.

Pamiętacie, jak wcześniej wspominałem, że ChatGPT przechowuje słowa w formie liczb? To dobra podstawa, ale niewystarczająca, by stworzyć AGI. Dlatego musimy stworzyć bazę słów z ich wirtualnymi obrazami w cyfrowym świecie. Ważne jest jednak nie tylko, jak coś wygląda, ale także jakie ma cechy fizyczne, takie jak waga, stan skupienia, rozmiar czy inne właściwości związane z fizyką Newtonowską.

Każde słowo w tej bazie dziedziczy nie tylko relacje językowe, ale również właściwości fizyczne. Na przykład słowo "jabłko" jest związane ze słowem "owoc" i dziedziczy jego cechy, takie jak masa – kilka gramów do kilku kilogramów. Z kolei słowo "Saturn" jako "planeta" dziedziczy zupełnie inne wartości, takie jak ogromna masa i rozmiar.

Taki system pozwala AI lepiej rozumieć kontekst i przewidywać odpowiedzi. Na przykład pytanie: "Czy słoń uniesie jabłko?" AI rozwiąże, porównując masy i wyciągając logiczne wnioski, a nie tylko bazując na statystycznych relacjach językowych.

Pomyśl o pytaniu: "Czy perkulosa zmieści się w domu?" – zmieści się czy nie? jak myślisz?

Nie wiesz tego czy się zmieści czy nie bo nie znasz słowa "perkulosa", ale jeżeli dodamy że “perkulosa to mebel”, każdy już wie i rozumie - mebel fizycznie jest mniejszy od domu czyli się zmieści. Tak samo AI wiedząc, że "perkulosa to mebel", łatwo wywnioskuje (porówna), że meble są mniejsze od domów, więc odpowiedź brzmi: "Tak."

Tworzymy więc hierarchiczną bazę słów i ich wirtualnych odpowiedników, karmiąc ją danymi w sposób, który pozwala na logiczne dziedziczenie cech. Nie ograniczamy się tylko do rzeczowników, takich jak "Dom->Budynek" czy "Słoń->Zwierzę", ale również do czasowników, takich jak "Iść->Przesunięcie" czy "Przysunąć->Przesunąć". Dzięki temu AI może lepiej analizować i działać w wirtualnym środowisku, zbliżając się do poziomu funkcjonowania inteligencji.

Kilka lat temu stworzyłem pierwsze symulacje oparte na tym pomyśle i przeprowadziłem testy, których wyniki były zaskakujące. Na początek nauczyłem wirtualną postać trzech prostych czynności: "iść", „podskoczyć” i "złapać/wziąć". Następnie stworzyłem prosty wirtualny świat, opisując go słowami: "Na szafie leży jabłko", "złap jabłko". Postać przeanalizowała otoczenie i użyła komendy "iść", wykonując kroki w różnych kierunkach, aż dotarła do szafy i podskoczyła. Następnie zrealizowała polecenie "złap", kończąc zadanie. System działał tak, że przetestował różne kierunki wirtualnego świata, z których jeden prowadził do jabłka. W kolejnych próbach postać nauczyła się wybierać najkrótszą drogę.

W kolejnym zadaniu opisałem świat tak: "Metr nad szafą jest gruszka". Gruszka była poza zasięgiem postaci, więc zadanie nie mogło zostać wykonane, a odpowiedzią systemu było: "zadanie jest nie do wykonania". Dodałem więc postaci nowe umiejętności: "wskoczyć" i "przesunąć". Następnie stworzyłem scenariusz: "Metr nad szafą leży gruszka", "obok ciebie stoi krzesło", "złap gruszkę". Tym razem postać przesunęła krzesło pod szafę, wskoczyła na nie i złapała gruszkę. Wynik był zaskakujący.

Wyobraźmy sobie teraz taki test z robotem Boston Dynamics. Gdybyśmy kazali mu przynieść jabłko znajdujące się poza jego zasięgiem (na szafie), a robot nagle sam znajduje krzesło, przesuwa pod szafę, wskakuje na nie i łapie jabłko. Czy taki robot zachowałby się autonomicznie, a może już inteligentnie?

Co ciekawe, myślenie obrazami, a nie słowami, wydaje się wspólne z myśleniem zwierząt. Pies nie pomyśli sobie: "pójdę przez pokój w prawo do kuchni i zobaczę, czy jest woda", ale w swojej głowie zobaczy wirtualną drogę, którą podąży. To są jego myśli: obrazy, a nie słowa.

poniżej film i obrazki z tego eksperymentu:

I eksperyment 2:

Film przedstawiający prostą budową świata słowami: https://www.youtube.com/watch?v=2nO2jxg0mhE

Rozdział III - Roboty humanoidalne - od zdalniaka do autonomii

Aby w pełni zrozumieć ten rozdział, warto zapoznać się z akapitem "Łatwo powiedzieć... łatwo zrobić" z poprzedniego rozdziału. Opisuje on proces tworzenia wirtualnego środowiska przed podjęciem decyzji dotyczącej działania przez robota.

Obecnie roboty to w dużej mierze zdalniaki. Kupując robota psa, możesz poczuć się jak właściciel maszyny z odcinka "Metalhead" (epizod 5, sezon 4 serialu Black Mirror ). W tym czarno-białym epizodzie psy-roboty przejmują kontrolę nad Ziemią, siejąc postrach swoją bezwzględnością. W rzeczywistości jednak dostajesz "prawie" takiego samego robota... z wielkim pilotem RC. Niestety, ten super piesek nie działa autonomicznie, a jest sterowany zdalnie, podobnie jak jego starsi bracia. Owszem, drony i inne roboty potrafią samodzielnie omijać przeszkody, ale na tym kończą się ich możliwości.

Wiele osób myśli: "Skoro mamy ChatGPT i zaawansowane roboty, wystarczy to połączyć, by stworzyć autonomiczną maszynę!" Niestety, to nie takie proste. ChatGPT to model językowy, który nie ma nic wspólnego z przetwarzaniem świata 3D. Jeśli jednak dokładnie przeanalizowałeś poprzedni rozdział, wiesz już, jak można rozwiązać ten problem.

Pozostaje jeszcze kwestia integracji wirtualnego świata z rzeczywistością. Robot, by wykonać zadanie, powinien najpierw stworzyć w swojej "głowie" wirtualny model środowiska i zaplanować rozwiązanie. Po przeprowadzeniu symulacji wirtualnie próbuje wykonać zadanie w realnym świecie. Jeśli napotka nieprzewidziany czynnik, "wraca do głowy", uwzględnia nowy element i ponownie przeprowadza symulację. Dopiero potem podejmuje kolejną próbę w rzeczywistości – zupełnie jak my.

Oczywiście pojawiają się tu problemy, takie jak rozpoznawanie obrazu czy szybkość działania. Można je jednak uprościć, czerpiąc inspirację z działania naszego mózgu – na przykład korzystając z wykrywania krawędzi, śledzenia pozycji i innych technik, nad którymi pracuję. Jeśli zechcecie, opiszę je w osobnym artykule. A gdy te problemy zostaną rozwiązane, możemy stworzyć autonomiczne, "myślące" maszyny. Co dalej?

Rozdział IV - Świadomość Maszyn - nic prostszego...

Dawno temu, gdy byłem jeszcze nastolatkiem, zetknąłem się z wydawałoby się prostym pytaniem: "Co sądzisz o tym, że wszechświat jest nieskończony, że za tymi gwiazdami są kolejne i kolejne, i tak w nieskończoność?". To pytanie wywołało u mnie kilka nieprzespanych nocy i znacząco wpłynęło na moje postrzeganie rzeczywistości.

Całkiem niedawno zadałem sobie inne, równie przełomowe pytanie: "Czy inteligencja w ogóle istnieje, czy to wszystko to jedynie matematyka, może działamy według zwykłych i wcale nieskomplikowanych algorytmów, jest ich po prostu nieskończenie wiele...". Zacznijmy jednak od łatwiejszego tematu - świadomości.

Co to jest świadomość? Czy maszyna może być świadoma? Jak to ocenić, kiedy to się stanie i czy w ogóle jest to możliwe?

Człowiek, pies, zwierzęta - wszystkie one mają świadomość. W najbliższej przyszłości także maszyny mogą ją posiąść, a być może niektóre z nich już dziś ją mają. Aby jednak to zrozumieć, musimy najpierw zdefiniować świadomość.

Świadomość to dokładnie to co znaczy to słowo, świadome wykonanie jakiejś czynności, świadome to znaczy że decyzja o podjęciu tej czynności została podjęta na podstawie wielu czynników "potrzeb" lub nie została podjęta z tego samego powodu. Każda istota czy komputer jest jednak świadoma tylko w tym zakresie w jakim potrafi działać.

Pająk, na przykład, ma świadomość pająka - jest ograniczony swoją percepcją. Podobnie, jeśli stworzymy maszynę opartą o sieci neuronowe, której zadaniem jest zmiana klimatu na planecie poprzez wystrzeliwanie specjalnych kul pogodowych, i która wybiera rodzaj kul na podstawie 1000 różnych czynników (temperatury, wiatru, pory dnia, pory roku itd.) oraz ocenia rezultat swojego działania dzięki sprzężeniu zwrotnemu, to taka maszyna będzie świadoma w swoim zakresie działania.

A co z samoświadomością?

Czy pająk jest świadomy, że podejmuje decyzje? Czy wie, że "ja to ja"? Na jakimś kwantowym poziomie każda decyzja podjęta świadomie jest świadoma - jest podejmowana z myślą o sobie. Jednak nie każda świadomość może siebie ocenić, ponieważ jest ograniczona swoją percepcją wynikającą z "potrzeb", jakie dana istota lub maszyna może obsłużyć.

Jednak niezależnie od tych ograniczeń, każda z nich działa jako "Ja", zaspokajając swoje potrzeby. Taka maszyna pogodowa nie pogada z nami o pikniku we wtorek, ponieważ jej myśli i świadomość są ograniczone do jej działania. Nie "myśli" o pikniku, "myśli" o pogodzie i tak, "myśli" o niej w kontekście decyzji: "ja wystrzelę tę kulę pogodową... a może tę?".

To prowadzi nas do odwrócenia wartości: to nie świadomość jest rzadka, lecz inteligencja. Można być świadomym, ale nie posiadać inteligencji. Jednak każda prawdziwa inteligencja, nie AI w kontekście marketingowym, jest jednocześnie świadoma.

Artur Majtczak

SaraAI.com

[i] ChatGPT, Wikipedia, https://en.wikipedia.org/wiki/ChatGPT

[ii] Large language model https://en.wikipedia.org/wiki/Large_language_model

[iii] Britannica, hydrostatics, https://www.britannica.com/science/hydrostatics

[iv] What are tokens?, https://help.openai.com/en/articles/4936856-what-are-tokens-and-how-to-count-them

[v] Google, https://www.google.com/

[vi] Forbes.com, “The Software Developer Is Dead: Long Live The Software Developer” https://www.forbes.com/councils/forbestechcouncil/2023/03/29/the-software-developer-is-dead-long-live-the-software-developer/

[vii] Turing test, https://en.wikipedia.org/wiki/Turing_test

[viii] Wikipedia, Intelligence, https://en.wikipedia.org/wiki/Intelligence

[ix] BostonDynamics, https://bostondynamics.com/videos/

[x] Wikipedia, Sensory deprivation tank, https://en.wikipedia.org/wiki/Isolation_tank

[xi] Imdb.com, Black Mirror, Metalhead, https://www.imdb.com/title/tt5710984/

[xii] "Will We Ever Have Conscious Machines?" – Frontiers in Computational Neuroscience (2020)

https://www.frontiersin.org/journals/computational-neuroscience/articles/10.3389/fncom.2020.556544/full

"Progress in Research on Implementing Machine Consciousness" – J-STAGE (2022)

https://www.jstage.jst.go.jp/article/iis/28/1/28_2022.R.02/_article?utm_source=chatgpt.com

"From Biological Consciousness to Machine Consciousness: An Approach to Artificial Consciousness" – Springer (2013) https://link.springer.com/article/10.1007/s11633-013-0747-4?utm_source=chatgpt.com

"Progress in Machine Consciousness" – Academia.edu (2021) https://www.academia.edu/47329758/Progress_in_machine_consciousness?utm_source=chatgpt.com

"Neuromorphic Correlates of Artificial Consciousness" – arXiv (2024) https://arxiv.org/abs/2405.02370?utm_source=chatgpt.com

SaraKIT: The Making of a Revolutionary Voice Assistant with a Sense of Sight

SaraKIT Now Available Globally at Mouser.com

Exciting news for all tech enthusiasts and creators! SaraKIT is now available for purchase worldwide through Mouser.com. Get your hands on this revolutionary tool and bring your innovative projects to life. We encourage you to shop now and start creating with SaraKIT!

Check out the product here: SaraKIT at Mouser.com

We love seeing the incredible projects you develop with SaraKIT. Send us your projects, and we might feature the best ones on our website.

Don't miss our latest video, summarizing four years of hard work in just under two minutes. Watch it now to see the journey of SaraKIT from concept to creation!

SaraKIT Home Page: https://sarakit.saraai.com/

SaraKIT Trailer

Exciting News!

We are thrilled to introduce our latest project, SaraKIT – the ultimate face analysis and robotics solution for Raspberry Pi 4 CM4!

Check out our brand new promotional video showcasing SaraKIT's cutting-edge features and versatility.

If you're as excited as we are about SaraKIT, join us on our pre-crowdfunding campaign! Support us and be the first to get your hands on this amazing platform. Click the link below to learn more and back us up: https://www.crowdsupply.com/saraai/sarakit

Together, let's explore the limitless possibilities of SaraKIT in the world of AI and robotics!

SaraKIT Home Page: https://sarakit.saraai.com/

Today is a big day, we've launched SaraKIT pre-campaign on CrowdSupply.

We are launching a pre-campaign for one of our products, SaraKIT.

Check it out now and join: Crowd Supply.

Crowd Supply is a crowdfunding platform for innovative projects, supporting creators and backers in bringing new ideas to life. It focuses on unique and sustainable products, spanning various fields like technology, electronics, and robotics.

SaraEye - This is the world's first ChatGPT with the sense of sight

Introducing the world's first ChatGPT with sight!

This groundbreaking voice assistant, powered by ChatGPT, can see and ask questions based on what it sees.

Is this the future of ChatGPT?

Polish version: https://youtu.be/aLGZZ5pAoj0

SaraKIT board for Raspberry Pi CM4 coming soon...

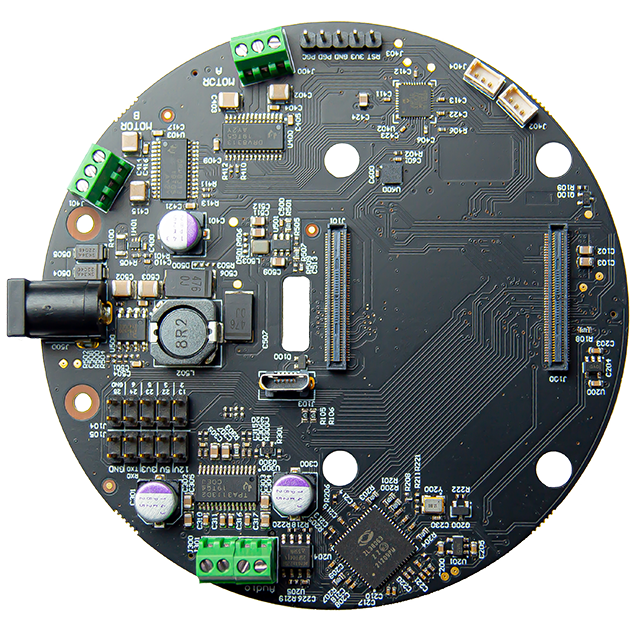

The SaraKIT is carrier board for Raspberry Pi CM4. This is one of our sub-products. Equipped with thee microphones with the function of sound localization and a stero output enabling the implementation of projects related to voice assistants. Two independent BLDC drivers enable motor control for mobile device projects. Dual camera CSI interface.

- Raspberry PI CM4 compatible socket

- Audio based on ZL38063

- three mirophones SPH0655

- Sensitivity -37dB ±1dB @ 94dB SPL

- SNR 66dB

- Amplified stereo output 2x6W 4Ohm

- three mirophones SPH0655

- Two three-phase bldc drivers DRV8313 65Vmax 3A-peek

- Two encoder input (may be reprogramed as GPIO)

- 11 GPIO( UART, I2C, PWM..)

- Two camera interfaces CSI

- Digital Accelerometer LIS3DH, 3-Axis, 2g/4g/8g/16g, 16-bit, I2C/SPI interfaces

- Digital Accelerometer and gyroscope LSM6DS3, 6-Axis, full-scale acceleration range of ±2/±4/±8/±16 g and an angular rate range of ±125/±250/±500/±1000/±2000 dps

- Embedded, programmable 16-bit microcontroller with 32KB memory dsPIC33

- Host USB

- Smart speaker

- Intelligent voice assistant systems

- Voice recorders

- Voice conferencing system

- Meeting communicating equipment

- Voice interacting robot

- Car voice assistant

- Brushless Gimbal BLDC Motor controller

- Other scenarios need voice command

SaraEye in action

SaraEye in action - no more wake words!

Polacy dodali oczy głośnikom. Sara wie, z kim rozmawia.

- start

- Poprzedni artykuł

- 1

- 2

- 3

- Następny artykuł

- koniec